10.2. Expectation and Variance

If a random variable \(X\) has density \(f\), the expectation \(E(X)\) is defined by

\[E(X) = \int_{-\infty}^{\infty} x f(x)\,dx\]

This is parallel to the definition of the expectation of a discrete random variable \(X\):

\[E(X) = \sum_{\text{all } x} x P(X = x)\]

Technical Note: Not all integrals are finite, and some don’t even exist. But in this class you don’t have to worry about that. All random variables we encounter will have finite expectations and variances.
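To make the parallel concrete, here is a small Python sketch. The discrete expectation is an exact weighted sum; for a density, the integral is approximated by a midpoint Riemann sum. The helper names `expectation_discrete` and `expectation_density`, the number of bins, and the example density \(f(x) = 2x\) on \((0, 1)\) (whose exact expectation is \(2/3\)) are illustrative choices, not part of the text.

```python
from fractions import Fraction

def expectation_discrete(dist):
    """E(X) = sum over all x of x * P(X = x); dist maps each value x to P(X = x)."""
    return sum(x * p for x, p in dist.items())

def expectation_density(f, lo, hi, n=100_000):
    """Approximate E(X) = integral of x * f(x) dx over (lo, hi) by a midpoint
    Riemann sum with n equal-width bins; assumes f is 0 outside (lo, hi)."""
    width = (hi - lo) / n
    total = 0.0
    for i in range(n):
        x = lo + (i + 0.5) * width  # midpoint of bin i
        total += x * f(x) * width   # x times the approximate probability of bin i
    return total

# Discrete: the number of spots on one roll of a fair die.
die = {k: Fraction(1, 6) for k in range(1, 7)}
die_mean = expectation_discrete(die)
print(die_mean)  # 7/2

# Continuous: the illustrative density f(x) = 2x on (0, 1); exact E(X) = 2/3.
density_mean = expectation_density(lambda x: 2 * x, 0, 1)
print(round(density_mean, 6))  # 0.666667
```

The sum over bins plays the role of the sum over values: each bin contributes its midpoint times the approximate probability \(f(x)\,\Delta x\).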

If \(X\) has density \(f\), then the expected square \(E(X^2)\) is defined by

\[E(X^2) = \int_{-\infty}^{\infty} x^2 f(x)\,dx\]

This is parallel to the definition of the expected square of a discrete random variable \(X\):

\[E(X^2) = \sum_{\text{all } x} x^2 P(X = x)\]

Whether \(X\) has a density or is discrete, the variance of \(X\) is defined as

\[Var(X) = E\big((X - E(X))^2\big) = E(X^2) - \big(E(X)\big)^2\]

and the standard deviation of \(X\) is defined as

\[SD(X) = \sqrt{Var(X)}\]

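The definitions combine into a short recipe: integrate \(x f(x)\) and \(x^2 f(x)\), then use \(Var(X) = E(X^2) - (E(X))^2\). The sketch below assumes a density that is zero outside a finite interval \((lo, hi)\) and approximates each integral numerically with the midpoint rule; `integrate` and `moments` are hypothetical helper names. For the illustrative density \(f(x) = 2x\) on \((0, 1)\), the exact values are \(E(X) = 2/3\), \(E(X^2) = 1/2\), and \(Var(X) = 1/18\).

```python
import math

def integrate(g, lo, hi, n=100_000):
    """Midpoint-rule approximation to the integral of g over (lo, hi)."""
    width = (hi - lo) / n
    return sum(g(lo + (i + 0.5) * width) for i in range(n)) * width

def moments(f, lo, hi):
    """Return (E(X), E(X^2), Var(X), SD(X)) for a density f that is 0 outside (lo, hi)."""
    ex = integrate(lambda x: x * f(x), lo, hi)
    ex2 = integrate(lambda x: x**2 * f(x), lo, hi)
    var = ex2 - ex**2          # Var(X) = E(X^2) - (E(X))^2
    return ex, ex2, var, math.sqrt(var)

# Illustrative density f(x) = 2x on (0, 1).
ex, ex2, var, sd = moments(lambda x: 2 * x, 0, 1)
print(round(ex, 4), round(ex2, 4), round(var, 4), round(sd, 4))  # 0.6667 0.5 0.0556 0.2357
```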
Properties of expectation and variance are the same as before. For example,

Linear functions: \(E(aX+b) = aE(X) + b\), \(SD(aX+b) = \vert a \vert SD(X)\)

Additivity of expectation: \(E(X+Y) = E(X) + E(Y)\)

Independence: \(X\) and \(Y\) are independent if \(P(X \in A, Y \in B) = P(X \in A)P(Y \in B)\) for all numerical sets \(A\) and \(B\).

Addition rule for variance: If \(X\) and \(Y\) are independent, then \(Var(X+Y) = Var(X)+Var(Y)\)

The Central Limit Theorem holds too: If \(X_1, X_2, \ldots \) are i.i.d. then for large \(n\) the distribution of \(S_n = \sum_{i=1}^n X_i\) is approximately normal.
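A quick simulation can illustrate several of these properties with continuous random variables. This is a sketch under stated assumptions: it uses Python's `random` and `statistics` modules, an arbitrary seed, and uniform variables only, so the checks are approximate rather than proofs. It compares \(SD(aX+b)\) with \(\vert a \vert SD(X)\), compares \(Var(X+Y)\) with \(Var(X)+Var(Y)\) for independently generated \(X\) and \(Y\), and, for the Central Limit Theorem, checks that about 95% of simulated values of \(S_{30}\) fall within 1.96 SDs of \(E(S_{30}) = 15\).

```python
import math
import random
import statistics

random.seed(0)  # arbitrary seed so the run is reproducible
N = 50_000

# X is uniform on (0, 1); Y = 6 * (an independent uniform) has a flat density on (0, 6).
xs = [random.random() for _ in range(N)]
ys = [6 * random.random() for _ in range(N)]

# Linear functions: SD(aX + b) = |a| SD(X), so this ratio should be |a| = 3.
a, b = -3, 10
sd_ratio = statistics.pstdev([a * x + b for x in xs]) / statistics.pstdev(xs)

# Addition rule: for independent X and Y, Var(X + Y) = Var(X) + Var(Y) = 1/12 + 3.
var_of_sum = statistics.pvariance([x + y for x, y in zip(xs, ys)])
sum_of_vars = statistics.pvariance(xs) + statistics.pvariance(ys)

# CLT: S_n is a sum of n i.i.d. uniform (0, 1) variables, with E(S_n) = n/2 and
# Var(S_n) = n/12. If S_n is roughly normal, about 95% of its values fall
# within 1.96 SDs of its mean.
n, reps = 30, 20_000
mu, sigma = n / 2, math.sqrt(n / 12)
sums = [sum(random.random() for _ in range(n)) for _ in range(reps)]
coverage = sum(abs(s - mu) < 1.96 * sigma for s in sums) / reps

print(round(sd_ratio, 6), round(var_of_sum, 3), round(sum_of_vars, 3), round(coverage, 3))
```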

So if you are working with a random variable that has a density, you have to know how to find probabilities, expectations, and variances using the density function. After that, probabilities and expectations combine just as they did in the discrete case.

10.2.1. Calculating Expectation and SD

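Let \(X\) have density given by

\[f(x) = \begin{cases} 6x(1-x) & 0 < x < 1 \\ 0 & \text{otherwise} \end{cases}\]

As we saw in the previous section, the density of \(X\) is symmetric about \(0.5\), so \(E(X)\) must be \(0.5\). This is consistent with the answer we get by applying the definition of expectation above:

\[E(X) = \int_0^1 x \cdot 6x(1-x)\,dx = 6\int_0^1 (x^2 - x^3)\,dx = 6\left(\frac{1}{3} - \frac{1}{4}\right) = \frac{1}{2}\]

To find \(Var(X)\), we start by finding \(E(X^2)\). We'll speed up the calculus, as it is similar to the above.

\[E(X^2) = \int_0^1 x^2 \cdot 6x(1-x)\,dx = 6\left(\frac{1}{4} - \frac{1}{5}\right) = \frac{3}{10}\]

So

\[Var(X) = E(X^2) - \big(E(X)\big)^2 = 0.3 - 0.25 = 0.05\]

and

\[SD(X) = \sqrt{0.05}\]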

```python
# SD(X) is the square root of Var(X) = 0.05
0.05 ** 0.5
```

0.22360679774997896
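Because the density here is a polynomial, the integrals can also be done exactly with rational arithmetic. The sketch below assumes the density \(f(x) = 6x(1-x)\) on \((0, 1)\) and represents polynomials as coefficient lists; `integrate_poly_01` and `times_x` are hypothetical helpers written for this illustration. It confirms that the total area is 1, \(E(X) = 1/2\), \(E(X^2) = 3/10\), and \(Var(X) = 1/20 = 0.05\).

```python
from fractions import Fraction

def integrate_poly_01(coeffs):
    """Exact integral over (0, 1) of c_0 + c_1 x + c_2 x^2 + ..., given [c_0, c_1, c_2, ...]."""
    return sum(Fraction(c) / (k + 1) for k, c in enumerate(coeffs))

def times_x(coeffs):
    """Multiply a polynomial by x by shifting every coefficient up one degree."""
    return [0] + list(coeffs)

f = [0, 6, -6]                                # f(x) = 6x - 6x^2 = 6x(1 - x) on (0, 1)
area = integrate_poly_01(f)                   # total area under f
ex = integrate_poly_01(times_x(f))            # E(X): integrate x f(x)
ex2 = integrate_poly_01(times_x(times_x(f)))  # E(X^2): integrate x^2 f(x)
var = ex2 - ex**2                             # Var(X) = E(X^2) - (E(X))^2

print(area, ex, ex2, var)   # 1 1/2 3/10 1/20
print(float(var) ** 0.5)    # 0.22360679774997896
```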

10.2.2. Uniform \((0, 1)\) Distribution

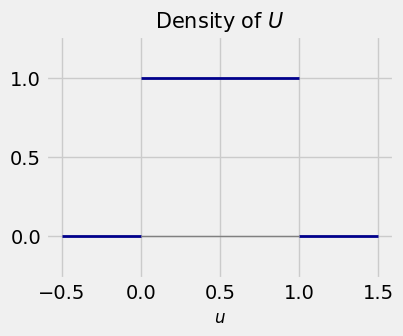

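A random variable \(U\) has the uniform distribution on the unit interval \((0, 1)\) if its density \(f\) is constant over the interval. Since the total area under the density must be 1, the constant is 1:

\[f(u) = \begin{cases} 1 & 0 < u < 1 \\ 0 & \text{otherwise} \end{cases}\]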

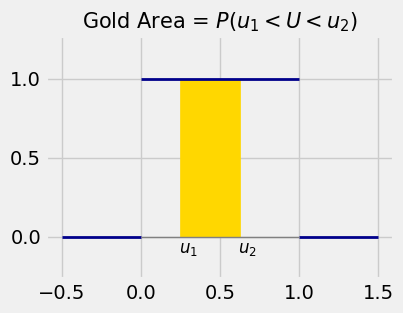

The probability of an interval is the length of the interval. For \(0 < u_1 < u_2 < 1\),

\[P(u_1 < U < u_2) = u_2 - u_1\]

This is the area of the gold rectangle in the figure below.

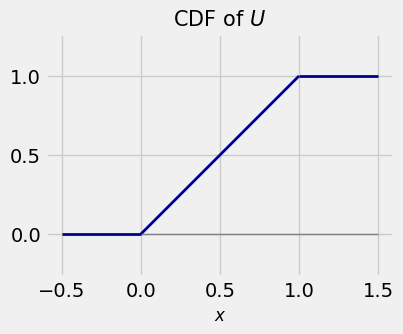
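The statement that probability equals length can be checked by simulation. This sketch assumes Python's `random.random()`, which returns floats in \([0, 1)\), as a stand-in for \(U\); the interval \((0.2, 0.45)\), the seed, and the sample size are arbitrary. The proportion of draws that land in the interval should be close to its length, \(0.25\).

```python
import random

random.seed(1)        # arbitrary seed for reproducibility
N = 100_000
u1, u2 = 0.2, 0.45    # an arbitrary interval inside (0, 1)

# random.random() is evaluated once per draw in the chained comparison.
hits = sum(u1 < random.random() < u2 for _ in range(N))
estimate = hits / N

print(estimate, u2 - u1)  # the estimate should be close to the length
```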

The cdf of \(U\) is given by:

\(F(x) = 0\) for \(x \le 0\)

\(F(x) = P(0 < U \le x) = x\) for \(0 < x < 1\)

\(F(x) = 1\) for \(x \ge 1\)
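The three cases of the cdf translate directly into a piecewise function. The names `uniform01_cdf` and `uniform01_interval_prob` are hypothetical; the second one uses \(P(u_1 < U \le u_2) = F(u_2) - F(u_1)\), which also gives sensible answers when an endpoint falls outside \((0, 1)\).

```python
def uniform01_cdf(x):
    """F(x) = P(U <= x) for U uniform on (0, 1)."""
    if x <= 0:
        return 0.0      # no probability at or below 0
    if x >= 1:
        return 1.0      # all probability is at or below 1
    return x            # F(x) = x on (0, 1)

def uniform01_interval_prob(u1, u2):
    """P(u1 < U <= u2) = F(u2) - F(u1), for u1 <= u2."""
    return uniform01_cdf(u2) - uniform01_cdf(u1)

print(uniform01_cdf(0.3), uniform01_interval_prob(0.25, 0.75), uniform01_interval_prob(-5, 0.4))
# 0.3 0.5 0.4
```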

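Clearly \(E(U) = 0.5\) by symmetry, and

\[E(U^2) = \int_0^1 u^2 \cdot 1\,du = \frac{1}{3}\]

So

\[Var(U) = \frac{1}{3} - \left(\frac{1}{2}\right)^2 = \frac{1}{12}\]

and

\[SD(U) = \frac{1}{\sqrt{12}} \approx 0.289\]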

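A simulation offers a rough check of these values. This sketch assumes Python's `random` and `statistics` modules with an arbitrary seed; the sample mean, variance, and SD should come out near \(1/2\), \(1/12 \approx 0.0833\), and \(1/\sqrt{12} \approx 0.289\).

```python
import math
import random
import statistics

random.seed(2)   # arbitrary seed for reproducibility
us = [random.random() for _ in range(100_000)]

mean_u = statistics.fmean(us)     # should be near E(U) = 1/2
var_u = statistics.pvariance(us)  # should be near Var(U) = 1/12
sd_u = math.sqrt(var_u)           # should be near SD(U) = 1/sqrt(12)

print(round(mean_u, 3), round(var_u, 4), round(sd_u, 3))
```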
10.2.3. Uniform \((a, b)\) Distribution

For any \(a < b\), the random variable \(X\) has the uniform distribution on the interval \((a, b)\) if its density is constant over the interval. The total area under the density has to be 1, so the density function is given by

\[f(x) = \begin{cases} \dfrac{1}{b-a} & a < x < b \\ 0 & \text{otherwise} \end{cases}\]

The probability of an interval is its relative length: for \(a < x_1 < x_2 < b\),

\[P(x_1 < X < x_2) = \frac{x_2 - x_1}{b - a}\]

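In code, the uniform \((a, b)\) density and interval probabilities take only a few lines. This is a sketch with hypothetical function names; unlike the formula in the text, which assumes \(a < x_1 < x_2 < b\), `uniform_interval_prob` first clips \((x_1, x_2)\) to \((a, b)\) so that it also handles intervals that extend past the endpoints.

```python
def uniform_pdf(x, a, b):
    """Density of the uniform (a, b) distribution: 1/(b - a) on (a, b), 0 elsewhere."""
    return 1 / (b - a) if a < x < b else 0.0

def uniform_interval_prob(x1, x2, a, b):
    """P(x1 < X < x2) for X uniform on (a, b): the length of the part of
    (x1, x2) inside (a, b), relative to the length b - a."""
    lo, hi = max(x1, a), min(x2, b)
    return max(hi - lo, 0) / (b - a)

print(uniform_pdf(3, 2, 6), uniform_interval_prob(3, 5, 2, 6), uniform_interval_prob(0, 3, 2, 6))
# 0.25 0.5 0.25
```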
By symmetry, \(E(X)\) is halfway between \(a\) and \(b\):

\[E(X) = \frac{a + b}{2}\]

No integration is needed for the variance either, because you can write \(X\) as a linear function of \(U\) where \(U\) is uniform on \((0, 1)\). Both have flat densities, so you can get from one to the other by stretching and shifting the values appropriately:

The random variable \((b-a)U\) has the uniform distribution on \((0, b-a)\).

The random variable \((b-a)U + a\) has the uniform distribution on \((a, b)\).

Conversely \(X - a\) has the uniform distribution on \((0, b-a)\), and \(\frac{X-a}{b-a}\) has the uniform distribution on \((0, 1)\).
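The stretch-and-shift relationship is easy to see numerically. In this sketch, with arbitrary \(a = 2\), \(b = 7\), and seed, draws of \(U\) from `random.random()` are mapped to \((b-a)U + a\); every result lands in \([a, b]\), mapping back with \((X-a)/(b-a)\) recovers \(U\) up to rounding, and the fraction landing in \((3, 4)\) should be near the relative length \(1/5\).

```python
import random

random.seed(3)    # arbitrary seed for reproducibility
a, b = 2.0, 7.0   # arbitrary interval, chosen for illustration
us = [random.random() for _ in range(50_000)]

xs = [(b - a) * u + a for u in us]       # stretch by b - a, then shift by a
back = [(x - a) / (b - a) for x in xs]   # reverse: shift by -a, then shrink by b - a

in_range = all(a <= x <= b for x in xs)
max_roundtrip_error = max(abs(u - v) for u, v in zip(us, back))
# Fraction of xs landing in (3, 4); for uniform (2, 7) the probability is 1/5.
frac = sum(3 < x < 4 for x in xs) / len(xs)

print(in_range, max_roundtrip_error, round(frac, 3))
```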

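Thus if \(X\) is uniform on \((a, b)\), then

\[X = (b-a)U + a\]

where \(U\) is uniform on \((0, 1)\). So

\[Var(X) = (b-a)^2 Var(U) = \frac{(b-a)^2}{12}\]

and

\[SD(X) = \frac{b-a}{\sqrt{12}}\]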
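As a final check, the formulas \(E(X) = (a+b)/2\) and \(SD(X) = (b-a)/\sqrt{12}\) can be compared with simulated values. The Python documentation describes `random.uniform(a, b)` as computing `a + (b-a) * random()`, which is exactly the linear function of \(U\) described above. The interval \((-1, 5)\), the seed, and the helper name `uniform_mean_sd` are arbitrary choices for this sketch; the formulas give \(E(X) = 2\) and \(SD(X) = \sqrt{3} \approx 1.732\).

```python
import math
import random
import statistics

def uniform_mean_sd(a, b):
    """E(X) = (a + b)/2 and SD(X) = (b - a)/sqrt(12) for X uniform on (a, b)."""
    return (a + b) / 2, (b - a) / math.sqrt(12)

random.seed(4)   # arbitrary seed for reproducibility
a, b = -1.0, 5.0
xs = [random.uniform(a, b) for _ in range(100_000)]

formula_mean, formula_sd = uniform_mean_sd(a, b)
sim_mean, sim_sd = statistics.fmean(xs), statistics.pstdev(xs)

print(formula_mean, round(formula_sd, 4))  # 2.0 1.7321
print(round(sim_mean, 3), round(sim_sd, 3))
```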